Generative AI, Apps, and DevOps

Join us on October 19, 2023, at the Pulumi Office (Seattle) from 5:00 to 8:30 pm PDT for talks and a workshop about AI/ML.

Artificial Intelligence (AI) and Machine Learning (ML) Tech Talks

Developers and DevOps professionals, meet us at Pulumi HQ to learn how to develop generative AI/ML applications using the Langchain Python library. Together, we will walk through the foundational concepts of this new generative domain, highlighting development, security, and operations concerns along the way.

Join us at our Seattle office and take this chance to network with fellow developers, learn about the latest AI/ML trends, and boost your skills.

Food provided by Pulumi. Drinks provided by Tessell.

Guest Speakers

Patrick Debois

VP of Engineering, Showpad

Todd Ebersviller

SVP Site Reliability Engineering, LivePerson

Andre Elizondo

Technical Sales Leader, Tessell

Ken Mugrage

Principal Technologist, ThoughtWorks

Agenda

- 5:00 pm - Doors open & Networking

- 6:30 pm - Lightning Talks

  - About AI & Software Delivery by Ken Mugrage

  - Just Enough Data Science for AI: DevOps to MLOps by Andre Elizondo

  - Cloud orchestration for AI scale apps by Kat Morgan

  - Drinking Your Own Champagne (LLM and Generative AI) by Todd Ebersviller

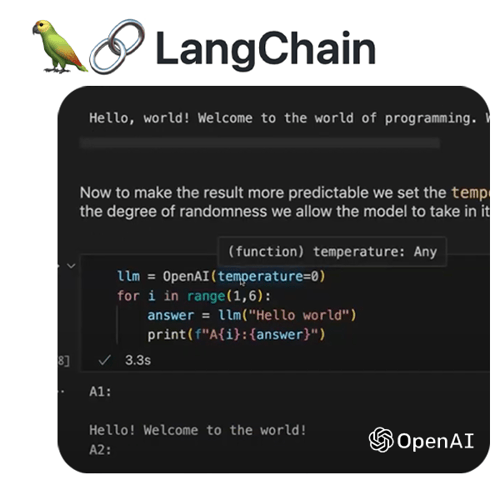

- 7:10 pm - Workshop: "Dev, Sec & Ops meet Langchain" by Patrick Debois

- 7:50 pm - More networking!

WORKSHOP

Dev, Sec & Ops meet Langchain

The emergence of LLMs and generative AI has added exciting new toys to our toolbelt for writing applications. Using code examples from the popular Langchain framework, Patrick Debois will walk you through the concepts of this new domain and highlight the dev, sec, and ops aspects along the way. With this, he hopes to give you a peek under the hood and demystify the magic.

Lessons include, but are not limited to:

Developers

- Calling a simple LLM using OpenAI

- Debugging in Langchain

- Chatting with an OpenAI chat model

- Loading embeddings and documents into a vector database

- Using OpenAI documentation to have the LLM generate calls that fetch real-time information

Operations

- Find out how many tokens you are using and what they cost

- Cache your calls to an LLM using exact matching or embeddings

- Track your calls and log them to a file (using a callback handler)

- Impose an output structure (such as JSON) and have the LLM retry if the output is not correct

Security

- Explain the OWASP Top 10 for LLMs

- Show how simple prompt injection works and some mitigation strategies

- Check the answers LLMs provide and have the model reflect on whether they are OK

- Use a Hugging Face model to detect if an LLM output was toxic

Join the AI/ML talks to learn:

- How to use Langchain to build AI capabilities in your programs

- How to use real programming languages for your AI/ML Operations deployment

- How to assess which AI/ML capabilities to prioritize for your use cases

- How to adapt to new machine learning feature development